Automatic detection of non-perfusion areas in diabetic macular edema from fundus fluorescein angiography for decision making using deep learning


ABSTRACT

Vision loss caused by diabetic macular edema (DME) can be prevented by early detection and laser photocoagulation. As there is no comprehensive detection technique to recognize non-perfusion areas (NPA),

we propose an automatic detection method for NPA on fundus fluorescein angiography (FFA) in DME. The study included 3,014 FFA images of 221 patients with DME. We used 3 convolutional neural

networks (CNNs), including DenseNet, ResNet50, and VGG16, to identify non-perfusion regions (NP), microaneurysms, and leakages in FFA images. The NPA was segmented using attention U-net. To

validate its performance, we applied our detection algorithm to 249 FFA images in which the NPA were manually delineated by 3 ophthalmologists. For DR lesion classification, the area under

the curve was 0.8855 for NP regions, 0.9782 for microaneurysms, and 0.9765 for the leakage classifier. The average precision of the NP region overlap ratio was 0.643. NP regions of DME in FFA images

are identified based on a new automated deep learning algorithm. This study is an in-depth study from computer-aided diagnosis to treatment, and will be the theoretical basis for the

application of intelligent guided laser.

INTRODUCTION

Diabetic retinopathy (DR) is one of the leading causes of preventable vision loss worldwide1. When the macula is affected in diabetic patients, it leads to

diabetic macular edema (DME), which is sometimes also considered a vision-threatening, advanced stage of DR. Automated DR screening using artificial intelligence based on retinal images

was found to be not only accurate but also cost-effective2,3. The Early Treatment Diabetic Retinopathy Study (ETDRS) established the definition of clinically significant macular edema

(CSME) for which grid/focal laser photocoagulation can significantly reduce the risk of visual acuity (VA) loss4. A standard set of definitions that describes the severity of retinopathy and

macular edema is critical in clinical decision making. Recently, anti-vascular endothelial growth factor (VEGF) therapies have proved more effective than laser photocoagulation and other

treatments5,6. Laser photocoagulation should be modified to be more effective. To make an intelligent treatment decision for DME, the planned treatment locations defined on

the FFA images must be aligned precisely with the actual sites on the retina during treatment. Detection of DR lesions, such as non-perfusion (NP) areas and microaneurysms, in fundus images and FFA images is

crucial for planning laser treatment locations7. This is a difficult task because of the large variation in the overall size, shape, location, and intensity of the retinal

pathologies8. There are some studies on automated classification of retinal pathologies in fundus images for DR screening; however, few studies have published an automated method to detect

the lesions in FFA images9,10. Annotating lesions on FFA images is costly. Automatic lesion detection of retinal pathologies in FFA images will aid clinicians in both treatment and

referrals. Non-perfusion areas (NPA) of the retina are associated with the development of vascular occlusion or capillary closure. Early detection of small isolated NPA

in DR patients is therefore crucial. Although NPA is one of the primary lesions occurring in DR, the grading of its severity is still based on indirect signs of ischemia detected on color fundus photographs

(CFPs) in daily routine practice. A series of automatic DR lesion detection algorithms based on CFPs provided indirect information on the status of retinal capillary perfusion. Classical

machine learning methods were used for detection and classification of exudates and cotton wool spots in CFPs11. Later, a deep learning system was able to detect lesions of referable DR in CFPs12.

Automatic detection of NPA not only provides a fast and consistent approach but also presents a cost-effective and reliable methodology compared to analysis performed by clinician

observers13. In this study, we identify lesions and segment non-perfusion areas in FFA images using deep learning, which is of significance for DR and DME management. On the basis

of the previous work, this study is an in-depth study from computer-aided diagnosis to treatment. The achievements of this study will be the theoretical basis for the application of

an intelligent laser model in clinical translational medicine.

METHODS

DATASET

A total of 221 patients (435 eyes) with DME (age range 31–81 years) who visited the Eye Center at the Second Affiliated

Hospital of Zhejiang University, during a period of 27 months from August 2016 to October 2019, were recruited to undergo FFA using a tabletop HRA-II system (Heidelberg, Germany) at 30° and

768 × 768 pixels. Pupil dilation was achieved with topical 1.0% tropicamide. Subjects were excluded from the study if they had a medical condition that prevented dilation or had overt media

opacity. All participants signed an informed consent form prior to participation in the study. This study was approved by the Medical Ethics Committee of the Second Affiliated Hospital,

Zhejiang University, and it complied with the Declaration of Helsinki.

ANNOTATION OF LESIONS

A local database of FFA images was annotated independently by 3 ophthalmologists to classify the

images containing important clinical findings such as microaneurysms, non-perfusion (NP) regions and leakage. Manually segmented ground-truth images were required to assess the NP region detection

performance of the algorithm. A binary map for each FFA image was created based on the consensus of the 3 ophthalmologists (Fig. 4). A total of 3,014 images whose clinical labels were mutually agreed upon

were used. Of these, 2,412 were included in the training set and 602 in the testing set; 482 were included in the validation set. The dataset contained

2,801 images of microaneurysms, 1,565 images of NP regions, and 579 images of leakage (Table 1).

IMAGE PREPROCESSING

The FFA images were normalized for contrast using an enhancement algorithm14,

which is not only beneficial for further processing by computer algorithms, but also for a better evaluation of the fundus by clinicians. Contrast is then enhanced in the luminosity channel

of L*a*b* color space by CLAHE (contrast limited adaptive histogram equalization). The CLAHE divides the image into small regions called tiles; the histogram on each tile is equalized so

that local contrast is enhanced. Data augmentation was then performed using horizontal and vertical filliping, rotating and adding Gaussian noise to balance the amounts of images of each

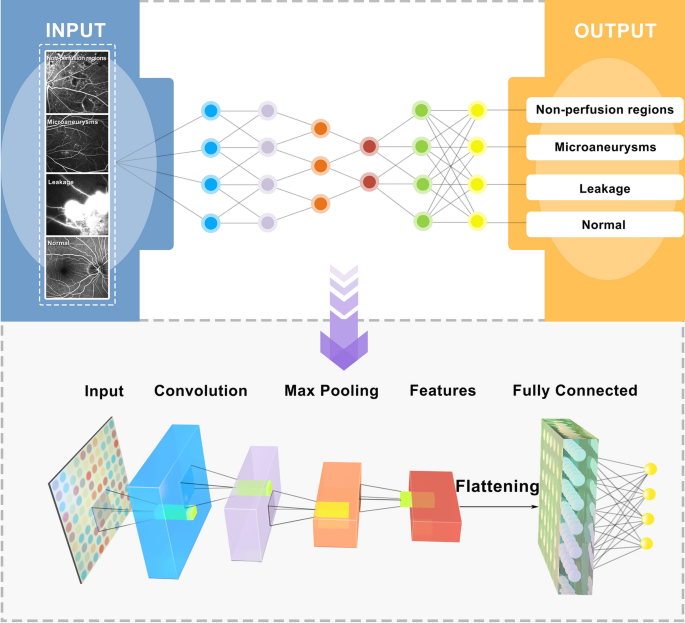

classification to increase the robustness of the algorithm. CLASSIFICATION OF LESIONS VIA CNN The characteristics and distribution of the dataset is shown in Table 1. A CNN model for

multi-label classification is constructed, which takes a single channel grayscale image as input and outputs a 4-length vector for 3 classes: (1) NP regions, (2) microaneurysms, (3) leakage.
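As a rough illustration of how a multi-label output of this kind can be read, the sketch below converts one raw score per lesion type into a list of detected lesions. It is not the authors' code: the sigmoid activation, the 0.5 threshold, the use of exactly three outputs (one per named lesion), and the names `LESIONS`, `sigmoid` and `decode_multilabel` are all assumptions made for the example.

```python
import math

# The three lesion types named in the text; using one output per lesion
# is an assumption of this sketch.
LESIONS = ("NP regions", "microaneurysms", "leakage")


def sigmoid(x: float) -> float:
    """Map a raw score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_multilabel(logits, threshold=0.5):
    """Return the lesions whose probability reaches the threshold.

    Each output is judged independently, so an image can carry several
    labels at once. If no lesion is detected, the image is reported as normal.
    """
    if len(logits) != len(LESIONS):
        raise ValueError("expected one score per lesion type")
    probabilities = [sigmoid(z) for z in logits]
    found = [name for name, p in zip(LESIONS, probabilities) if p >= threshold]
    return found if found else ["normal"]


if __name__ == "__main__":
    print(decode_multilabel([2.0, -3.0, 0.1]))    # two lesions above threshold
    print(decode_multilabel([-1.0, -2.0, -3.0]))  # nothing above threshold
```

Judging each output independently, rather than picking the single highest score, is what allows one image to be labelled with several lesions at the same time.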

Each dimension in the output indicates whether the corresponding lesion is present in the input image; if none is present, the image is normal. The architecture of the CNN is a DenseNet with 4

dense blocks, each of which has 16 dense layers with a growth rate of 12. The overall structure of this deep learning model is shown in Fig. 1. The CNN was trained with a stochastic

gradient descent (SGD) optimizer. The performance of the algorithm was evaluated by the area under the curve (AUC) of the receiver operating characteristic (ROC) curve, generated by plotting

sensitivity (true positive rate) against 1 − specificity (false positive rate).

AUTOMATIC SEGMENTATION OF NP REGIONS

We constructed an attention U-net for NP region detection with a down-sampling path

and an up-sampling path, as shown in Fig. 2. In the down-sampling path, 64 × 64 feature maps are generated by 4 max-pooling operations. Before each down-sampling, two composition blocks

containing 3 × 3 convolution, batch normalization (BN) and rectified linear unit (ReLU) are adopted. The final feature maps of the down-sampling path are up-sampled to the original image size

in 4 steps in the up-sampling path. The NP dataset has 246 images, each of which contains NP regions. It was divided into a training set and a test set at a ratio of 5:1 (205:41). Data

augmentation like that for FFA classification was applied. We computed the recall of proposals at different Intersection-over-Union (IoU) ratios with NP ground truth boxes.

RESULTS

The FFA

dataset has 3,014 images, of which 2,801 images contain microaneurysms, 1,565 contain NP regions, and 579 contain leakage. Results of prediction performance for different lesions on FFA

images are shown in Fig. 3. For each DR lesion, we can use these plots to derive the sensitivity, specificity and AUC of the three CNN models. To assess the statistical sensitivity we plotted

each ROC curve 1,000 times. For DenseNet, AUC was 0.8855 for NP regions, 0.9782 for microaneurysms, and 0.9765 for the leakage classifier. For ResNet50, AUC was 0.7895 for NP regions, 0.8633

for microaneurysms, and 0.9305 for the leakage classifier. For VGG16, AUC was 0.7689 for NP regions, 0.8396 for microaneurysms, and 0.9430 for the leakage classifier. The sensitivity and

specificity of the 3 doctors' diagnoses are also shown in Table 2. The outputs for NP regions are probability maps for each FFA image (Fig. 4). The first column shows the manually

segmented ground truth of the three ophthalmologists in three different colors. The overlap areas of the ground-truth results are shown in white in the second column. The third column

shows the result of our algorithm’s prediction. Since the training data consisted of patches centered on the lesion of interest, the regions of elevated probability in the probability map

tended to cover both the lesion of interest and the surrounding pixels. In Fig. 5, we show recall as a function of the IoU overlap ratio for the NP regions. The precision-recall curve

demonstrates the accuracy of NP detection. The average precision is 0.643.

DISCUSSION

In this study, we have detected lesions of DME which need focal retinal photocoagulation. Excellent

performance has been reported in the detection of DR containing macroscopically visible lesions on the task of single-label image classification9. However, the applicability of CNNs to multi-label

images remains an open problem. In our study, DenseNet was first used to identify the multi-label lesions of microaneurysms, NP regions and leakage on FFA images. Multi-label

classification using deep multi-model neural networks has been reported with good results on whole slide breast histopathology images and X-ray images15,16. The use of many square image patches

for training and detection with a sliding window technique has been employed successfully for microaneurysm detection, but the limitation of MS-CNN is time efficiency17. We infer that

DenseNet might be the appropriate CNN architecture for analysis of the FFA imaging modality. At the lesion level, the proposed detector outperforms heatmap generation algorithms of semantic

segmentation based on FFA images. Classical lesion detection methods were segmentation methods based on mathematical morphology, region growing, or pixel classification, using fundus images18,19,20.

Abdelmoula et al. presented a framework for segmenting choroidal neovascularization lesions based on parametric modeling of the intensity variation in FFA21. However, limited expert-labeled data is a

common problem. A CNN-based U-net structure was used to detect retinal exudates with promising results, and it does not rely on expert knowledge or manual segmentation for

detecting relevant lesions22. Our proposed solution is a promising image mining tool, which has the potential to discover new biomarkers in images. Precision treatment of DR lesions such as

that prescribed by the ETDRS protocol requires that retina specialists locate each lesion seen on the FFA on the patient’s fundus, which is a tedious procedure with frequent retreatment

sessions23. It is important to point out that most AI-based applications in medicine focus on diagnosis or screening, and few are involved with treatment. NAVILAS was reported as the

first navigated retinal laser photocoagulation system for the treatment of DR, but its planning is done manually offline and is time-consuming24. The navigated laser in a randomized clinical trial

resulted in significantly fewer injections and yielded visual and anatomic gains comparable to monthly dosing at 2 years25. In the clinical setting, we avoid the large vessels of the retina

and shift the location of photocoagulation when we perform laser photocoagulation with uniform spacing. We detect the defining lesions with an average precision of 0.643 using a computer-aided

system, and plan the aiming laser spot on the retina. There are limitations to this study. Annotating lesions on FFA images is costly; learning from weakly supervised data helps to

reduce the need for large-scale labeled training data in deep learning. Some NP annotation tasks are difficult even for a human doctor. The FFA images and fundus images with the same

fields of view were manually registered; automatic registration will be done in the future. Our system helps clinical ophthalmologists make laser treatment decisions for diabetic

retinopathy, but its accuracy and safety should be evaluated by clinical trials in the future. Although AI has achieved a series of successes in ophthalmology, great challenges remain

for its application in assisting doctors with the diagnosis and treatment of retinal diseases. Our algorithm can assist doctors in reading FFA images and guiding laser treatment, and may

be used as an intelligent tool for FFA analysis in the future. Through this system, doctors can receive an AI report labelled with the different lesions as soon as the test is finished, which

will improve the doctors’ efficiency by detecting abnormal findings that doctors may overlook. Moreover, the AI suggestion of laser treatment can also help junior doctors make

decisions.

REFERENCES

* Yau, J. W. _et al._ Global prevalence and major risk factors of diabetic retinopathy. _Diabetes Care_ 35, 556–564. https://doi.org/10.2337/dc11-1909 (2012).
* Ting, D. S. W. _et al._ Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. _JAMA_ 318, 2211–2223. https://doi.org/10.1001/jama.2017.18152 (2017).
* Tufail, A. _et al._ Automated diabetic retinopathy image assessment software: diagnostic accuracy and cost-effectiveness compared with human graders. _Ophthalmology_ 124, 343–351. https://doi.org/10.1016/j.ophtha.2016.11.014 (2017).
* Early Treatment Diabetic Retinopathy Study Research Group. Early photocoagulation for diabetic retinopathy: ETDRS report number 9. _Ophthalmology_ 98, 766–785 (1991).
* Elman, M. J. _et al._ Expanded 2-year follow-up of ranibizumab plus prompt or deferred laser or triamcinolone plus prompt laser for diabetic macular edema. _Ophthalmology_ 118, 609–614. https://doi.org/10.1016/j.ophtha.2010.12.033 (2011).
* Elman, M. J. _et al._ Intravitreal ranibizumab for diabetic macular edema with prompt versus deferred laser treatment: three-year randomized trial results. _Ophthalmology_ 119, 2312–2318. https://doi.org/10.1016/j.ophtha.2012.08.022 (2012).
* Silva, P. S. _et al._ Diabetic retinopathy severity and peripheral lesions are associated with nonperfusion on ultrawide field angiography. _Ophthalmology_ 122, 2465–2472. https://doi.org/10.1016/j.ophtha.2015.07.034 (2015).
* Zheng, Y. _et al._ Automated segmentation of foveal avascular zone in fundus fluorescein angiography. _Invest. Ophthalmol. Vis. Sci._ 51, 3653–3659. https://doi.org/10.1167/iovs.09-4935 (2010).
* Lam, C., Yu, C., Huang, L. & Rubin, D. Retinal lesion detection with deep learning using image patches. _Invest. Ophthalmol. Vis. Sci._ 59, 590–596. https://doi.org/10.1167/iovs.17-22721 (2018).
* Rasta, S. H., Nikfarjam, S. & Javadzadeh, A. Detection of retinal capillary nonperfusion in fundus fluorescein angiogram of diabetic retinopathy. _BioImpacts BI_ 5, 183–190. https://doi.org/10.15171/bi.2015.27 (2015).
* Zhang, X. H., Chutatape, O. & IEEE. In: _ICIP: 2004 International Conference on Image Processing, Volumes 1–5 IEEE International Conference on Image Processing (ICIP)_ 139–142 (2004).
* Abramoff, M. D. _et al._ Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. _Invest. Ophthalmol. Vis. Sci._ 57, 5200–5206. https://doi.org/10.1167/iovs.16-19964 (2016).
* Zheng, Y., Kwong, M. T., Maccormick, I. J., Beare, N. A. & Harding, S. P. A comprehensive texture segmentation framework for segmentation of capillary non-perfusion regions in fundus fluorescein angiograms. _PLoS ONE_ 9, e93624. https://doi.org/10.1371/journal.pone.0093624 (2014).
* Zhou, M., Jin, K., Wang, S., Ye, J. & Qian, D. Color retinal image enhancement based on luminosity and contrast adjustment. _IEEE Trans. Bio Med. Eng._ 65, 521–527. https://doi.org/10.1109/tbme.2017.2700627 (2018).
* Mercan, C. _et al._ Multi-instance multi-label learning for multi-class classification of whole slide breast histopathology images. _IEEE Trans. Med. Imaging_ 37, 316–325. https://doi.org/10.1109/tmi.2017.2758580 (2018).
* Baltruschat, I. M. & Nickisch, H. Comparison of deep learning approaches for multi-label chest X-ray classification. _Sci. Rep._ 9, 6381. https://doi.org/10.1038/s41598-019-42294-8 (2019).
* Cao, W., Czarnek, N., Shan, J. & Li, L. Microaneurysm detection using principal component analysis and machine learning methods. _IEEE Trans. Nanobiosci._ 17, 191–198. https://doi.org/10.1109/tnb.2018.2840084 (2018).
* Niemeijer, M., van Ginneken, B., Staal, J., Suttorp-Schulten, M. S. & Abramoff, M. D. Automatic detection of red lesions in digital color fundus photographs. _IEEE Trans. Med. Imaging_ 24, 584–592. https://doi.org/10.1109/tmi.2005.843738 (2005).
* Niemeijer, M., van Ginneken, B., Russell, S. R., Suttorp-Schulten, M. S. & Abramoff, M. D. Automated detection and differentiation of drusen, exudates, and cotton-wool spots in digital color fundus photographs for diabetic retinopathy diagnosis. _Invest. Ophthalmol. Vis. Sci._ 48, 2260–2267. https://doi.org/10.1167/iovs.06-0996 (2007).
* Sanchez, C. I. _et al._ A novel automatic image processing algorithm for detection of hard exudates based on retinal image analysis. _Med. Eng. Phys._ 30, 350–357. https://doi.org/10.1016/j.medengphy.2007.04.010 (2008).
* Abdelmoula, W. M., Shah, S. M. & Fahmy, A. S. Segmentation of choroidal neovascularization in fundus fluorescein angiograms. _IEEE Trans. Bio Med. Eng._ 60, 1439–1445. https://doi.org/10.1109/tbme.2013.2237906 (2013).
* Zheng, R. _et al._ Detection of exudates in fundus photographs with imbalanced learning using conditional generative adversarial network. _Biomed. Opt. Express_ 9, 4863–4878. https://doi.org/10.1364/boe.9.004863 (2018).
* Bamroongsuk, P., Yi, Q., Harper, C. A. & McCarty, D. Delivery of photocoagulation treatment for diabetic retinopathy at a large Australian ophthalmic hospital: comparisons with national clinical practice guidelines. _Clin. Exp. Ophthalmol._ 30, 115–119 (2002).
* Kozak, I. _et al._ Clinical evaluation and treatment accuracy in diabetic macular edema using navigated laser photocoagulator NAVILAS. _Ophthalmology_ 118, 1119–1124. https://doi.org/10.1016/j.ophtha.2010.10.007 (2011).
* Payne, J. F. _et al._ Randomized trial of treat and extend ranibizumab with and without navigated laser versus monthly dosing for DME: TREX-DME 2 year outcomes. _Am. J. Ophthalmol._ https://doi.org/10.1016/j.ajo.2019.02.005 (2019).

ACKNOWLEDGEMENTS

This work was financially supported by the Zhejiang Provincial Key Research and Development Plan (Grant Number

2019C03020), the National Key Research and Development Program of China (2019YFC0118401), and the Natural Science Foundation of China (Grant Number 81670888).

AUTHOR INFORMATION

Author notes

* These authors contributed equally: Kai Jin and Xiangji Pan.

AUTHORS AND AFFILIATIONS

* Department of Ophthalmology, The Second Affiliated Hospital of Zhejiang University, College of Medicine, Hangzhou, 310009, China: Kai Jin, Xiangji Pan, Zhifang Liu, Jing Cao, Lixia Lou, Yufeng Xu, Zhaoan Su, Ke Yao & Juan Ye

* College of Computer Science and Technology, Zhejiang

University, Hangzhou, 310027, China: Kun You & Jian Wu

CONTRIBUTIONS

K.J., X.P., and K.Y. conceived and designed the experiments. Z.L., J.C., L.L., and Y.X. collected and processed the data. J.W., Z.S., KE.Y., and J.Y. analyzed the results. All authors reviewed the manuscript.

CORRESPONDING AUTHOR

Correspondence to Juan Ye.

ETHICS DECLARATIONS

COMPETING INTERESTS

The authors declare no competing interests.

ADDITIONAL INFORMATION

PUBLISHER'S NOTE

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

RIGHTS AND PERMISSIONS

OPEN ACCESS This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

ABOUT THIS ARTICLE

CITE THIS ARTICLE

Jin, K., Pan, X., You, K. _et al._ Automatic detection of non-perfusion areas in diabetic macular edema from fundus fluorescein angiography for decision making using deep learning. _Sci Rep_ 10, 15138 (2020). https://doi.org/10.1038/s41598-020-71622-6

* Received: 30 March 2020
* Accepted: 30 July 2020
* Published: 15 September 2020
* DOI: https://doi.org/10.1038/s41598-020-71622-6